Asymptotic equipartition property

In information theory, the asymptotic equipartition property (AEP) is a general property of the output samples of a stochastic source. It is fundamental to the concept of the typical set used in theories of compression.

Roughly speaking, the theorem states that although a random process may produce many different series of results, the one actually produced is most probably from a loosely defined set of outcomes that all have approximately the same chance of being the one actually realized. (This is a consequence of the law of large numbers and ergodic theory.) Although there are individual outcomes that have a higher probability than any outcome in this set, the vast number of outcomes in the set almost guarantees that the outcome will come from the set.
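As an illustration of the statement above, the following minimal sketch computes the exact probability mass and size of the typical set for a Bernoulli source. The parameter values (p = 0.2, tolerance eps = 0.1) and the function names are assumptions chosen for this example, and "typical" is taken here to mean that the per-symbol log-probability lies within eps of the entropy.

```python
from math import comb, log2

def entropy(p):
    """Entropy in bits of one Bernoulli(p) symbol."""
    return -p * log2(p) - (1 - p) * log2(1 - p)

def log_prob(k, n, p):
    """log2-probability of any single length-n sequence containing k ones."""
    return k * log2(p) + (n - k) * log2(1 - p)

def is_typical(k, n, p, eps):
    """True if a length-n sequence with k ones is eps-typical for Bernoulli(p)."""
    return abs(-log_prob(k, n, p) / n - entropy(p)) <= eps

def typical_set_stats(n, p, eps):
    """Return (probability mass, number of sequences) of the typical set."""
    mass, size = 0.0, 0
    for k in range(n + 1):
        if is_typical(k, n, p, eps):
            size += comb(n, k)                        # sequences with k ones
            mass += comb(n, k) * 2.0 ** log_prob(k, n, p)
    return mass, size

for n in (50, 200, 1000):
    mass, size = typical_set_stats(n, p=0.2, eps=0.1)
    # mass approaches 1 while log2(size)/n stays below entropy + eps
    print(n, mass, log2(size) / n, entropy(0.2))

# The single most probable sequence (all zeros) is not typical.
print(is_typical(0, 1000, 0.2, 0.1))
```

With these assumed parameters, the all-zeros sequence is the most probable individual outcome, yet it falls outside the typical set, while the set as a whole carries almost all of the probability for large n.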

In the field of pseudorandom number generation, a candidate generator of undetermined quality whose output sequence lies too far outside the typical set by some statistical criterion is rejected as insufficiently random. Thus, although the typical set is loosely defined, practical notions arise concerning sufficient typicality.
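One possible statistical criterion can be sketched as follows. For a fair-bit source every sequence has the same probability, so the check below instead compares the relative frequency of each k-bit block with its expected value 2^-k. The block length, the tolerance and the function name are hypothetical choices for this sketch, not a standard test suite.

```python
import random
from collections import Counter
from itertools import product

def block_frequencies_typical(bits, k=2, eps=0.05):
    """True if every k-bit pattern occurs among the non-overlapping
    k-bit blocks of `bits` with relative frequency within eps of 2**-k."""
    blocks = [tuple(bits[i:i + k]) for i in range(0, len(bits) - k + 1, k)]
    counts = Counter(blocks)
    target = 2.0 ** -k
    # Check all 2**k patterns, including ones that never occur.
    return all(abs(counts[pat] / len(blocks) - target) <= eps
               for pat in product((0, 1), repeat=k))

rng = random.Random(0)                        # seeded only for repeatability
candidate = [rng.getrandbits(1) for _ in range(20000)]
print(block_frequencies_typical(candidate))   # output of a reasonable generator
print(block_frequencies_typical([0, 1] * 10000))  # alternating bits
```

A sequence such as strictly alternating bits has balanced single-bit frequencies but fails at k = 2, which is why a criterion of this kind usually looks at blocks rather than single symbols.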


Definition

Given a discrete-time stationary ergodic stochastic process $X$ on the probability space $(\Omega, B, p)$, AEP is an assertion that

$$-\frac{1}{n} \log p(X_1, X_2, \dots, X_n) \to H(X) \quad \text{as} \quad n \to \infty$$

where $X_1, X_2, \dots, X_n$ denotes the process limited to duration $\{1, \dots, n\}$, and $H(X)$ or simply $H$ denotes the entropy rate of $X$, which must exist for all discrete-time stationary processes including the ergodic ones. AEP is proved for finite-valued (i.e. $|\Omega| < \infty$) stationary ergodic stochastic processes in the Shannon-McMillan-Breiman theorem using ergodic theory, and for any i.i.d. source directly using the law of large numbers, in both the discrete-valued case (where $H$ is simply the entropy of a symbol) and the continuous-valued case (where $H$ is the differential entropy instead). The definition of AEP can also be extended to certain classes of continuous-time stochastic processes for which a typical set exists for a long enough observation time. The convergence is proven almost sure in all cases.

AEP for discrete-time i.i.d. sources

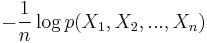

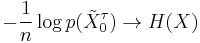

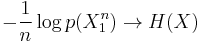

Given  is an i.i.d. source, its time series X1, ..., Xn is i.i.d. with entropy H(X) in the discrete-valued case and differential entropy in the continuous-valued case. The weak law of large numbers gives the AEP with convergence in probability,

is an i.i.d. source, its time series X1, ..., Xn is i.i.d. with entropy H(X) in the discrete-valued case and differential entropy in the continuous-valued case. The weak law of large numbers gives the AEP with convergence in probability,

since the entropy is equal to the expectation of  . The strong law of large number asserts the stronger almost sure convergence,

. The strong law of large number asserts the stronger almost sure convergence,

which implies the result from the weak law of large numbers.
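The convergence for an i.i.d. source can be observed numerically. The sketch below assumes a hypothetical three-symbol source with probabilities 1/2, 1/4, 1/4 (entropy 1.5 bits) and compares the per-symbol log-probability of one sampled sequence with the entropy. All names are illustrative.

```python
import random
from math import log2

SOURCE = {"a": 0.5, "b": 0.25, "c": 0.25}   # assumed symbol probabilities

def entropy(probs):
    """Entropy in bits of one symbol."""
    return -sum(q * log2(q) for q in probs.values())

def sample_rate(probs, n, rng):
    """Draw n i.i.d. symbols and return -(1/n) log2 p(x_1, ..., x_n)."""
    symbols = list(probs)
    weights = [probs[s] for s in symbols]
    xs = rng.choices(symbols, weights=weights, k=n)
    # Independence turns the joint log-probability into a sum.
    return -sum(log2(probs[x]) for x in xs) / n

rng = random.Random(1)                       # seeded only for repeatability
for n in (10, 100, 10000):
    print(n, sample_rate(SOURCE, n, rng), entropy(SOURCE))
```

Because the joint probability factorizes, the quantity is just a sample mean of the per-symbol values of -log2 p, which is why the law of large numbers applies directly.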

AEP for discrete-time finite-valued stationary ergodic sources

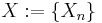

Consider a finite-valued sample space  , i.e.

, i.e.  , for the discrete-time stationary ergodic process

, for the discrete-time stationary ergodic process  defined on the probability space

defined on the probability space  . The AEP for such stochastic source, known as the Shannon-McMillan-Breiman theorem, can be shown using the sandwich proof by Algoet and Cover outlined as follows:

. The AEP for such stochastic source, known as the Shannon-McMillan-Breiman theorem, can be shown using the sandwich proof by Algoet and Cover outlined as follows:

- Let

denote some measurable set

denote some measurable set  for some

for some

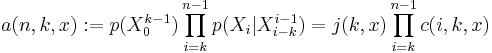

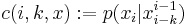

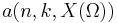

- Parameterize the joint probability by

and x as

and x as

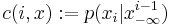

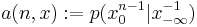

- Parameterize the conditional probability by

and

and  as

as  .

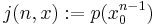

. - Take the limit of the conditional probability as

and denote it as

and denote it as

- Argue the two notions of entropy rate

![\lim_{n\to\infty} E[-\log j(n,X)]](/2012-wikipedia_en_all_nopic_01_2012/I/7a44ea5280d00c135495e0248e15340c.png) and

and ![\lim_{n\to\infty} E[-\log c(n,n,X)]](/2012-wikipedia_en_all_nopic_01_2012/I/98f605d21e6d5b8a00e865af33d958cb.png) exist and are equal for any stationary process including the stationary ergodic process

exist and are equal for any stationary process including the stationary ergodic process  . Denote it as

. Denote it as  .

. - Argue that both

and

and  , where

, where  is the time index, are stationary ergodic processes, whose sample means converge almost surely to some values denoted by

is the time index, are stationary ergodic processes, whose sample means converge almost surely to some values denoted by  and

and  respectively.

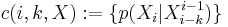

respectively. - Define the

-th order Markov approximation to the probability

-th order Markov approximation to the probability  as

as

- Argue that

is finite from the finite-value assumption.

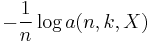

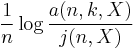

is finite from the finite-value assumption. - Express

in terms of the sample mean of

in terms of the sample mean of  and show that it converges almost surely to

and show that it converges almost surely to

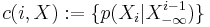

- Define

, which is a probability measure.

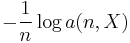

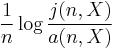

, which is a probability measure. - Express

in terms of the sample mean of

in terms of the sample mean of  and show that it converges almost surely to

and show that it converges almost surely to

- Argue that

as

as  using the stationarity of the process.

using the stationarity of the process. - Argue that

using the Lévy's martingale convergence theorem and the finite-value assumption.

using the Lévy's martingale convergence theorem and the finite-value assumption. - Show that

![E\left[\frac{a(n,k,X)}{j(n,X)}\right]=a(n, k,X(\Omega))](/2012-wikipedia_en_all_nopic_01_2012/I/2e49bbad358edcb847885e4ecb5d3b39.png) which is finite as argued before.

which is finite as argued before. - Show that

![E\left[\frac{j(n,X)}{a(n,X)}\right]=1](/2012-wikipedia_en_all_nopic_01_2012/I/f954b12abb3286340b093c3684039054.png) by conditioning on the infinite past

by conditioning on the infinite past  and iterating the expectation.

and iterating the expectation. - Show that

![(\forall \alpha\in\mathbb{R})\left(\Pr\left[\frac{a(n,k,X)}{j(n,X)}\geq \alpha \right]\leq \frac{a(n, k,X(\Omega))}{\alpha}\right)](/2012-wikipedia_en_all_nopic_01_2012/I/8f7065c4bdf037cb3f7276ee82404deb.png) using the Markov's inequality and the expectation derived previously.

using the Markov's inequality and the expectation derived previously. - Similarly, show that

![(\forall \alpha\in\mathbb{R})\left(\Pr\left[\frac{j(n,X)}{a(n,X)}\geq \alpha \right]\leq \frac{1}{\alpha}\right)](/2012-wikipedia_en_all_nopic_01_2012/I/74ef83c5ef643fe22dd473a24ee73267.png) , which is equivalent to

, which is equivalent to ![(\forall \alpha\in\mathbb{R})\left(\Pr\left[\frac1n\log\frac{j(n,X)}{a(n,X)}\geq \frac1n\log\alpha \right]\leq \frac{1}{\alpha}\right)](/2012-wikipedia_en_all_nopic_01_2012/I/ec8319776f1a99a4a060fccb8f4482d7.png) .

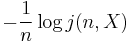

. - Show that

of both

of both  and

and  are non-positive almost surely by setting

are non-positive almost surely by setting  for any

for any  and applying the Borel-Cantelli lemma.

and applying the Borel-Cantelli lemma. - Show that

and

and  of

of  are lower and upper bounded almost surely by

are lower and upper bounded almost surely by  and

and  respectively by breaking up the logarithms in the previous result.

respectively by breaking up the logarithms in the previous result. - Complete the proof by pointing out that the upper and lower bounds are shown previously to approach

as

as  .

.

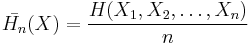
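The theorem can be checked numerically on the simplest source with memory. The sketch below assumes a hypothetical two-state Markov chain (transition matrix P, started from its stationary distribution PI) as an example of a finite-valued stationary ergodic process, and compares the per-symbol log-probability of a sampled path with the entropy rate. It illustrates the statement only, not the sandwich argument itself.

```python
import random
from math import log2

P = [[0.9, 0.1], [0.4, 0.6]]   # assumed transition probabilities P[x][y]
PI = [0.8, 0.2]                # stationary distribution of P (PI P = PI)

def entropy_rate(P, pi):
    """Entropy rate in bits of a stationary Markov chain: sum_x pi_x H(P[x])."""
    return -sum(pi[x] * P[x][y] * log2(P[x][y])
                for x in range(len(P)) for y in range(len(P)))

def sample_rate(n, rng):
    """Sample a length-n path and return -(1/n) log2 p(x_0, ..., x_{n-1})."""
    x = 0 if rng.random() < PI[0] else 1       # stationary start
    total = -log2(PI[x])
    for _ in range(n - 1):
        y = 0 if rng.random() < P[x][0] else 1
        total -= log2(P[x][y])                 # chain rule, one step at a time
        x = y
    return total / n

rng = random.Random(7)                         # seeded only for repeatability
for n in (100, 1000, 50000):
    print(n, sample_rate(n, rng), entropy_rate(P, PI))
```

For a first-order Markov chain the k-th order Markov approximation is exact for every k of at least 1, so the upper and lower bounds of the sandwich coincide and the limit is simply the entropy rate computed by `entropy_rate`.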

AEP for non-stationary discrete-time source producing independent symbols

The assumptions of stationarity, ergodicity and identical distribution of the random variables are not essential for the AEP to hold. Indeed, as is quite clear intuitively, the AEP requires only that some form of the law of large numbers hold, which is a fairly general condition. However, the expression needs to be suitably generalized, and the conditions need to be formulated precisely.

We assume that the source is producing independent symbols, with possibly different output statistics at each instant. We assume that the statistics of the process are known completely, that is, the marginal distribution of the process seen at each time instant is known. The joint distribution is just the product of the marginals. Then, under the condition (which can be relaxed) that $\mathrm{Var}[\log p(X_i)] < M$ for all $i$, for some $M > 0$, the following holds (AEP):

$$\lim_{n\to\infty}\Pr\left[\left|-\frac{1}{n} \log p(X_1, X_2, \dots, X_n) - \bar{H}_n(X)\right| < \epsilon\right] = 1 \qquad \forall \epsilon > 0$$

where

$$\bar{H}_n(X) = \frac{1}{n} H(X_1, X_2, \dots, X_n).$$

- Proof

The proof follows from a simple application of Markov's inequality to the second moment of the centered sum $\sum_{i=1}^n \log p(X_i)$ (that is, Chebyshev's inequality), together with independence, which makes the variance of the sum equal to the sum of the variances:

$$\Pr\left[\left|-\frac{1}{n} \log p(X_1, X_2, \dots, X_n) - \bar{H}_n(X)\right| > \epsilon\right] \leq \frac{1}{n^2 \epsilon^2} \mathrm{Var}\left[\sum_{i=1}^n \log p(X_i)\right] = \frac{1}{n^2 \epsilon^2} \sum_{i=1}^n \mathrm{Var}[\log p(X_i)] \leq \frac{M}{n \epsilon^2} \to 0 \quad \text{as} \quad n \to \infty.$$

The proof also holds if any moment $E[|\log p(X_i)|^r]$ is uniformly bounded for $r > 1$ (again by Markov's inequality applied to the $r$th moment).

Even this condition is not necessary, but given a non-stationary random process, it should not be difficult to test whether the AEP holds using the above method.
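Such a test can be sketched numerically. The example below assumes a hypothetical independent binary source whose probability of emitting a 1 varies periodically with time, and compares the per-symbol log-probability of one sampled sequence with the averaged entropy (1/n) H(X_1, ..., X_n). The function `p_one` and all parameter values are illustrative assumptions.

```python
import random
from math import log2

def p_one(i):
    """Hypothetical time-varying probability of a 1 at time i (stays in [0.1, 0.82])."""
    return 0.1 + 0.08 * (i % 10)

def binary_entropy(p):
    return -p * log2(p) - (1 - p) * log2(1 - p)

def compare(n, rng):
    """Return (-(1/n) log2 p(x_1..x_n), (1/n) sum_i H(X_i)) for one sampled sequence."""
    neg_log_p, entropy_sum = 0.0, 0.0
    for i in range(n):
        p = p_one(i)
        x = 1 if rng.random() < p else 0
        neg_log_p -= log2(p if x else 1 - p)   # independent symbols: logs add
        entropy_sum += binary_entropy(p)       # joint entropy is the sum of marginals
    return neg_log_p / n, entropy_sum / n

rng = random.Random(3)                         # seeded only for repeatability
for n in (100, 2000, 20000):
    print(n, *compare(n, rng))
```

Since the probabilities are bounded away from 0 and 1 in this assumed source, the variance of log p(X_i) is uniformly bounded, so the condition used in the proof is met.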

Applications for AEP for non-stationary source producing independent symbols

The AEP for a non-stationary discrete-time independent process leads (among other results) to the source coding theorem for non-stationary sources with independent output symbols and to the channel coding theorem for non-stationary memoryless channels.
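The link between the AEP and source coding can be illustrated on the simplest special case, an i.i.d. Bernoulli source, rather than the non-stationary setting. The sketch below assumes a two-part code (one flag bit, then either an index into the typical set or the raw sequence) and computes its exact expected length per symbol. The code construction, function name and parameter values are assumptions made for this example.

```python
from math import comb, log2

def expected_code_rate(n, p, eps):
    """Expected bits per symbol of a two-part typical-set code for Bernoulli(p).

    Returns (rate, entropy) with both values in bits per symbol.
    """
    h = -p * log2(p) - (1 - p) * log2(1 - p)
    typical_mass, typical_count = 0.0, 0
    for k in range(n + 1):                      # k = number of ones
        log_prob = k * log2(p) + (n - k) * log2(1 - p)
        if abs(-log_prob / n - h) <= eps:
            typical_count += comb(n, k)
            typical_mass += comb(n, k) * 2.0 ** log_prob
    # Flag bit plus a fixed-length index that can address every typical sequence.
    bits_typical = 1 + (typical_count - 1).bit_length()
    # Flag bit plus the uncompressed sequence.
    bits_atypical = 1 + n
    expected = typical_mass * bits_typical + (1 - typical_mass) * bits_atypical
    return expected / n, h

for n in (100, 1000):
    print(n, *expected_code_rate(n, p=0.2, eps=0.1))
```

Under these assumptions the rate stays below one bit per symbol and within roughly eps of the entropy, because the rarely used long codewords for atypical sequences contribute almost nothing to the average.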

Source Coding Theorem

The source coding theorem for discrete-time non-stationary independent sources is covered in the article on the source coding theorem.

Channel Coding Theorem

The channel coding theorem for discrete-time non-stationary memoryless channels is covered in the article on the noisy-channel coding theorem.

AEP for certain continuous-time stationary ergodic sources

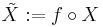

Discrete-time functions can be interpolated to continuous-time functions. If such interpolation  is measurable, we may define the continuous-time stationary process accordingly as

is measurable, we may define the continuous-time stationary process accordingly as  . If AEP holds for the discrete-time process, as in the i.i.d. or finite-valued stationary ergodic cases shown above, it automatically holds for the continuous-time stationary process derived from it by some measurable interpolation. i.e.

. If AEP holds for the discrete-time process, as in the i.i.d. or finite-valued stationary ergodic cases shown above, it automatically holds for the continuous-time stationary process derived from it by some measurable interpolation. i.e.  where

where  corresponds to the degree of freedom in time

corresponds to the degree of freedom in time  .

.  and

and  are the entropy per unit time and per degree of freedom respectively, defined by Shannon.

are the entropy per unit time and per degree of freedom respectively, defined by Shannon.

An important class of such continuous-time stationary processes is the bandlimited stationary ergodic process with the sample space being a subset of the continuous $\mathcal{L}_2$ functions. AEP holds if the process is white, in which case the time samples are i.i.d., or if there exists $T > 1/(2W)$, where $W$ is the nominal bandwidth, such that the $T$-spaced time samples take values in a finite set, in which case we have the discrete-time finite-valued stationary ergodic process.

Any time-invariant operation also preserves AEP, stationarity and ergodicity, and we may easily turn a stationary process into a non-stationary one without losing AEP by nulling out a finite number of time samples in the process.


References

The Classic Paper

- Claude E. Shannon. A Mathematical Theory of Communication. Bell System Technical Journal, July/October 1948.

Other Journal Articles

- Paul H. Algoet and Thomas M. Cover. A Sandwich Proof of the Shannon-McMillan-Breiman Theorem. The Annals of Probability, 16(2): 899-909, 1988.

- Sergio Verdú and Te Sun Han. The Role of the Asymptotic Equipartition Property in Noiseless Source Coding. IEEE Transactions on Information Theory, 43(3): 847-857, 1997.

Textbooks on Information Theory

- Thomas M. Cover and Joy A. Thomas. Elements of Information Theory. New York: Wiley, 1991. ISBN 0-471-06259-6

- David J. C. MacKay. Information Theory, Inference, and Learning Algorithms. Cambridge: Cambridge University Press, 2003. ISBN 0-521-64298-1

$$\lim_{n\to\infty}\Pr\left[\left|-\frac{1}{n} \log p(X_1, X_2, \dots, X_n) - H(X)\right| > \epsilon\right] = 0 \qquad \forall \epsilon > 0.$$

$$\Pr\left[\lim_{n\to\infty} -\frac{1}{n} \log p(X_1, X_2, \dots, X_n) = H(X)\right] = 1$$